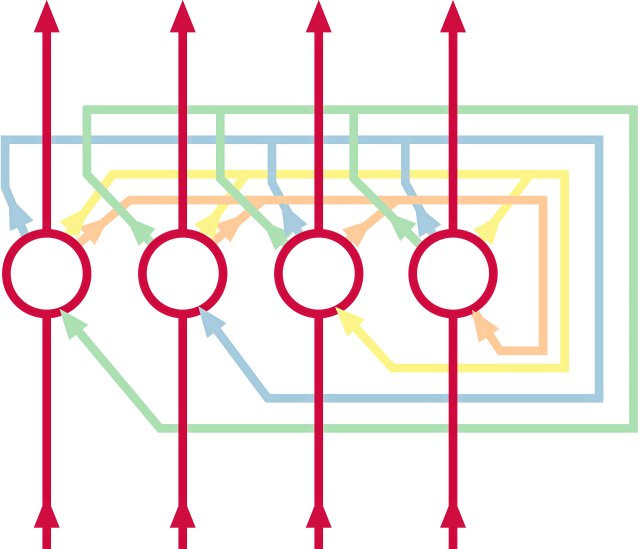

Schematic representation of a Hopfield network

Photo: nd

Information technology is currently undergoing a revolution. Large language models such as ChatGPT, as well as translation software and other everyday applications, have become so good that they can be used for many practical purposes. New artificial intelligence methods are also being used in medical diagnostics, materials science, sociology, literary studies, the energy industry and many other areas in which large amounts of data have to be sifted through and processed. However, these software packages only deliver meaningful results if they have been properly prepared for their task with good training data.

John Hopfield and Geoffrey Hinton will receive this year’s Nobel Prize in Physics for their fundamental work on these processes. The two scientists have worked in this field since the 1980s, when it was still young. Approaches to machine learning had existed since the 1940s, when the first “electronic brains” emerged. But only as the computing power and storage capacity of computers grew did it become possible to use larger amounts of data for machine learning.

Artificial intelligence is inspired by the neuronal circuitry of biological brain cells. Classic computer technology is characterized by strict processing of commands that are specified by the program code. Biological systems, on the other hand, learn by exchanging information with their environment. The neurons in the brain and central nervous system work in parallel and in different layers. Learning occurs when certain connections between neurons in different layers gain a stronger influence, while others are weakened.

Informative hilly landscape

Machine learning takes this type of information processing as its model: First, an interconnection structure is specified, and then this structure “learns” based on example data. The input data is fed into the network and the system’s initially poor response is successively optimized by learning algorithms adapting the connections between the various artificial neurons as cleverly as possible.
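The idea of adjusting connections until the response improves can be illustrated with a deliberately tiny example. The following sketch is not taken from the laureates’ work: it assumes a single artificial neuron, uses the classic perceptron update rule as a stand-in for “a learning algorithm”, and takes the logical AND function as hypothetical example data. The names `predict` and `train_neuron` are invented for illustration.

```python
# Minimal sketch: one artificial neuron "learns" the logical AND function.
# Assumption: the perceptron rule stands in for the learning algorithm.

def predict(weights, bias, inputs):
    """Return 1 if the weighted sum of the inputs exceeds zero, else 0."""
    total = bias + sum(w * x for w, x in zip(weights, inputs))
    return 1 if total > 0 else 0

def train_neuron(examples, epochs=20, rate=1):
    """Strengthen or weaken each connection whenever the answer is wrong."""
    weights = [0] * len(examples[0][0])
    bias = 0
    for _ in range(epochs):
        for inputs, target in examples:
            # error is +1, 0 or -1: how far the current answer is off
            error = target - predict(weights, bias, inputs)
            weights = [w + rate * error * x for w, x in zip(weights, inputs)]
            bias += rate * error
    return weights, bias

# Hypothetical example data: the truth table of AND.
AND_EXAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

weights, bias = train_neuron(AND_EXAMPLES)
answers = [predict(weights, bias, inputs) for inputs, _ in AND_EXAMPLES]
print(answers)
```

The neuron starts with all connections at zero and therefore answers poorly at first. Each wrong answer nudges the connections, and after a few passes over the examples the answers match the targets.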

To this end, the two laureates developed new ideas that continue to enrich the field today. The American John Hopfield of Princeton University had already conducted research on magnetic systems and on questions in molecular biology, and had gained a reputation as an outstanding theorist, when he encountered brain research at a neuroscience meeting. In 1982, he proposed a method that gives artificial neural networks a type of associative memory. He chose a learning principle inspired by a so-called potential landscape, a concept that occurs in many places in physics. It can easily be illustrated with a mountain landscape in which a ball always seeks the direct path downwards, i.e. to a state with as little energy as possible.

Translated into machine learning, this means: If a pixel of a training image matches an already saved image, then the energy of the corresponding node and network path is reduced; the landscape “erodes” in the desired direction, so to speak. If the training image does not match, the search continues. In this way, successful training forms a hilly energy landscape that shows future balls the right path to the target. This data structure is called the “Hopfield network” in his honor.

This allows you to store images without having to save each one individually: If you have several similar images, the trained energy landscape is sufficient not only to store them but even to distinguish between them.
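How a trained energy landscape can restore a stored image may be easier to see in a small sketch. The code below is an illustration under stated assumptions, not Hopfield’s original formulation: it uses the textbook Hebbian rule to set the connection weights, represents each “pixel” as +1 or -1, and works on two hypothetical eight-pixel patterns. The function names are invented for this example.

```python
# Minimal sketch of a Hopfield-style associative memory (pure Python).
# Assumptions: Hebbian weights, pixels encoded as +1 / -1, toy patterns.

def train(patterns):
    """Build the weight matrix: pixels that agree get a positive connection."""
    n = len(patterns[0])
    w = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:  # no connection from a node to itself
                    w[i][j] += p[i] * p[j] / len(patterns)
    return w

def energy(w, state):
    """Height of the landscape at this state; stored patterns sit in valleys."""
    n = len(state)
    return -0.5 * sum(w[i][j] * state[i] * state[j]
                      for i in range(n) for j in range(n))

def recall(w, state, max_sweeps=20):
    """Let the 'ball' roll downhill: update one pixel at a time until stable."""
    state = list(state)
    for _ in range(max_sweeps):
        changed = False
        for i in range(len(state)):
            field = sum(w[i][j] * state[j] for j in range(len(state)))
            new_value = 1 if field >= 0 else -1
            if new_value != state[i]:
                state[i] = new_value
                changed = True
        if not changed:
            break
    return state

# Two hypothetical stored "images" of eight pixels each.
PATTERN_A = [1, 1, 1, 1, -1, -1, -1, -1]
PATTERN_B = [1, -1, 1, -1, 1, -1, 1, -1]
weights = train([PATTERN_A, PATTERN_B])

noisy_a = [-1, 1, 1, 1, -1, -1, -1, -1]  # PATTERN_A with its first pixel flipped
restored = recall(weights, noisy_a)
print(restored == PATTERN_A, energy(weights, noisy_a), energy(weights, restored))
```

Neither image is stored as a separate record; both live in the same weight matrix. A damaged input slides into the nearest valley, which here is the matching stored pattern, and its energy drops along the way.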

The Briton Geoffrey Hinton from the University of Toronto worked with such Hopfield networks in the following years and developed them further. In particular, he created a so-called “Boltzmann machine” that differed from Hopfield’s original approach. The goal of his networks was not just to store data and to associate it with data that was already known. He wanted to see whether such networks could also structure information in a way that allows them to recognize patterns.

To do this, Hinton used methods of statistical physics and in particular an equation by Ludwig Boltzmann, who helped create the foundations of thermodynamics in the 19th century. According to this equation, some states are more likely than others, depending on their energy level. Using this principle, Hinton created a network system consisting of several layers and two different types of nodes: “visible” nodes and “hidden” nodes. The visible nodes bring information from outside into the network and carry the processed information back out. The hidden nodes ensure appropriate information processing within the system.
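The statement that some states are more likely than others, depending on their energy, can be made concrete with a short calculation. The sketch below assumes the standard Boltzmann factor, in which the probability of a state is proportional to exp(-E / T), with Boltzmann’s constant set to 1 for simplicity. The energy values and the function name `boltzmann_probabilities` are hypothetical.

```python
import math

# Minimal sketch: turn energies into probabilities with the Boltzmann factor.
# Assumptions: probability is proportional to exp(-E / T), Boltzmann constant = 1.

def boltzmann_probabilities(energies, temperature=1.0):
    """Return one probability per state; lower energy means higher probability."""
    lowest = min(energies)  # shifting by the minimum avoids overflow in exp()
    factors = [math.exp(-(e - lowest) / temperature) for e in energies]
    normalizer = sum(factors)  # the "partition function" of statistical physics
    return [f / normalizer for f in factors]

# Three hypothetical states with increasing energy.
for t in (0.5, 1.0, 5.0):
    probs = boltzmann_probabilities([0.0, 1.0, 2.0], temperature=t)
    print(t, [round(p, 3) for p in probs])
```

At low temperature almost all of the probability sits on the lowest-energy state, while at high temperature the states become nearly equally likely. A Boltzmann machine exploits this relationship by treating the configurations of its nodes as states with an energy.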

With this structure, Hinton had already created a so-called “generative model” that is also used by today’s AI systems to recognize patterns and generate new data based on known patterns. A Boltzmann machine can recognize certain things in images, even if it has never “seen” that particular image before. This makes the Boltzmann machine a crucial forerunner of current AI systems.

However, the original Boltzmann machine was not very practical for carrying out more complex tasks. While many researchers had lost interest in the entire field, Hinton continued to pursue it and in 2006, together with colleagues, introduced a number of exciting new ideas. These included pre-trained networks with a whole series of Boltzmann machines arranged one above the other at different levels. This increased the training efficiency and performance of the entire system enormously. Today’s complex AI systems are mostly so-called “deep neural networks” that consist of many layers of artificial neurons.

From physics to computer science

Following the Nobel Prize announcement, Hinton appeared astonished and even “flattered” by the honor in a telephone conversation. In fact, machine learning and artificial intelligence are areas that do not belong to the classic core disciplines of physics, but are now more likely to be classified as computer science. However, their origin lies in physics, or rather in the physics institutes that drove the development of computer technology and software. Today, physicists, computer scientists and mathematicians often work together to further develop these technologies.

For many years, it was particularly the data-hungry large research institutions in physics where machine learning was used – from particle physics to astrophysics. Thanks to the methods developed in the process, this area has grown rapidly over the last decade – with such dynamism that people have to worry about the energy supply to data centers. Training artificial intelligence systems requires huge amounts of data that have to be processed in large data centers with a corresponding hunger for electricity, which will hopefully be fed from green sources in the future.

In any case, this year’s Nobel Prize in Physics shows how decades of basic research can have a massive impact on modern society. The new laureates join a line of other researchers who made today’s information age possible and who were also awarded the prestigious prize: in 1956 for the transistor, in 2000 for the development of the integrated circuit, in 2007 for the discovery of giant magnetoresistance, which made modern magnetic hard drives possible, and in 2009 for work on fiber optics, which made today’s data networks possible.

Without all this groundbreaking research, today’s world would be hard to imagine – and in a few years the same will be said about the findings of the new laureates.
